So Your School is Thinking About Adopting AI Solutions? Here’s what you should be looking for.

SEPTEMBER 23, 2023

HUDSON HARPER, Education Data Strategist/Consultant

New AI tools and platforms claiming to make teachers’ and students’ lives easier are emerging all over the place. Schools are starting to explore AI for everything from classroom instruction and personal tutoring to responding to parent emails and running business office applications. It’s tempting to start subscribing to as many services as you can to test and use them. After all, the promises are quite amazing! AI will revolutionize education!

A (Near) Impossible Problem

Unfortunately, adopting technology (of any kind) is never that simple. Even before AI, EdTech companies faced scrutiny for their varying levels of protection when it comes to student privacy and data. As EdSurge highlighted earlier this year, the number of EdTech tools used by schools has grown above 1,400, and many of them have become targets in a growing trend of cyber-attacks in the education sector.

Ensuring that students are protected by the services used for school is one of the most important and most difficult tasks school technology departments face. Adding AI into the mix makes this job even harder: the data privacy concerns go beyond simply what data is accessible through 3rd party applications and vendors, and they are both more nuanced and less understood.

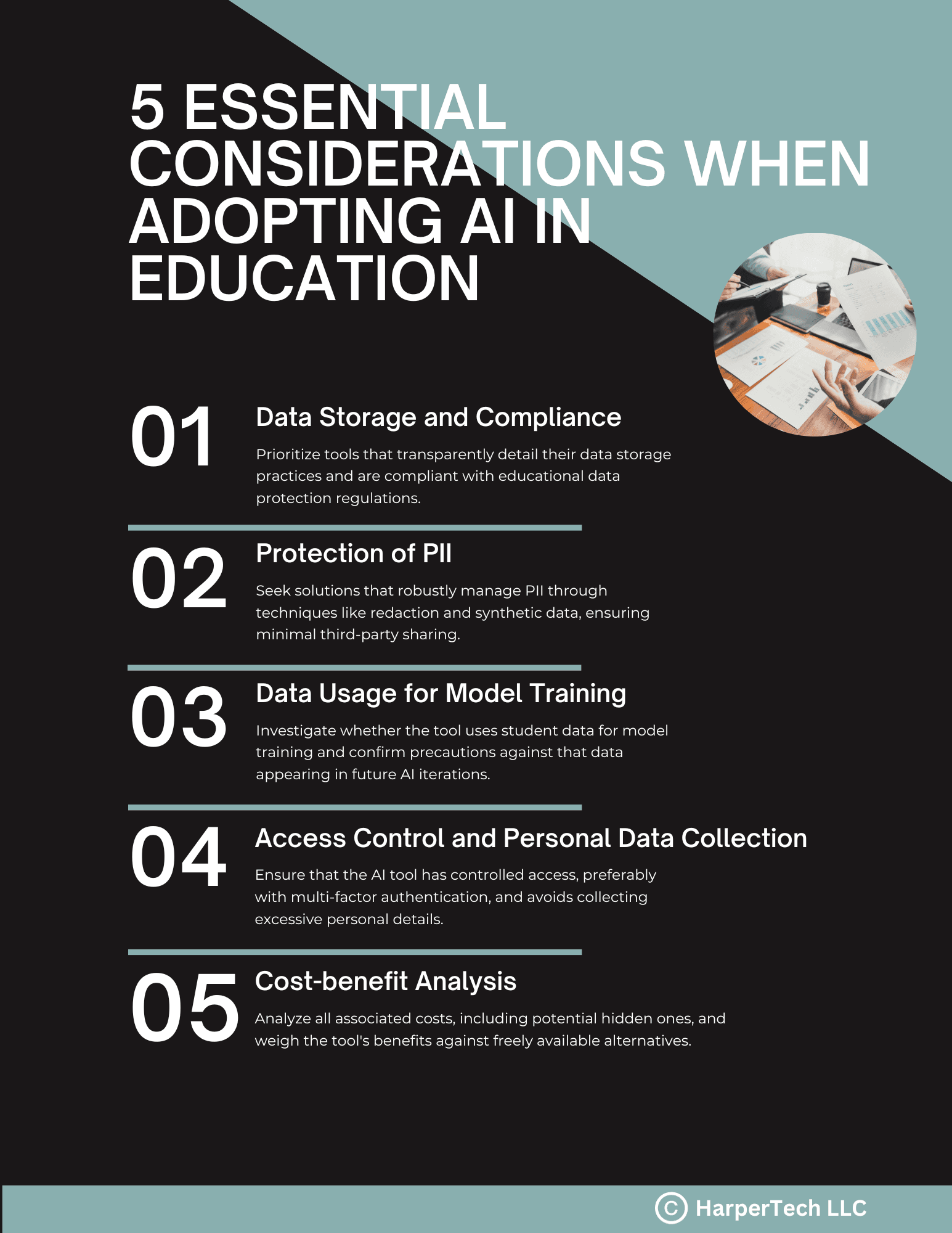

So what does a school need to consider as it adopts AI-powered applications and technologies? To help schools answer this question, I’ve put together the following list of questions to consider as they explore AI tools.

Data Storage and Compliance

Given a school’s top priority to protect students and their data, it shouldn’t be a surprise that the first thing I’d suggest considering is the security of user data.

- Storage: Where and how is the data stored? Is it in a cloud-based system or locally? How transparent is the company behind the tool when it comes to data storage?

- Regulatory Compliance: Does the AI tool comply with relevant educational data protection regulations and laws that schools are bound to? (For instance, in the U.S., this could refer to FERPA, COPPA, and CCPA regulations.)

- Retention and Auditing: How long is the data retained? Is there a mechanism to review or audit the data storage practices? For example, ChatGPT by default retains data for 30 days unless a specific business arrangement has been made to implement a 0 day retention policy. However, there are currently no mechanisms for a school to audit usage of ChatGPT by students, teachers, or admins.

Protection of Personally Identifiable Information (PII)

Protecting PII is a common concern when it comes to cyber-security, but AI has its own concerns as PII can appear unexpectedly through the use of large language models (LLMs) depending on whether or not an AI has been trained on PII without proper safeguards.

- Handling of PII: How is PII managed? Are there multiple levels of data protection in place? Things to look for are mentions of redaction using natural language processing or, better yet, synthetic data (if the model is being trained). What isn’t acceptable (in my opinion) is any mention of human intervention to reduce the use or proliferation of PII.

- Exposure: To whom or what (like AI models) is PII exposed? Is it shared with third-party vendors, or is it kept strictly in-house? For example, Poe AI’s data & privacy policy states that third-party bot creators may review user data and prompts to refine use of their tools. This becomes a secondary risk of using AI that is even harder to keep track of.
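To make the idea of automated redaction concrete, here is a minimal sketch in Python. It is an illustration only: it uses simple regular expressions as a stand-in for the natural language processing approaches mentioned above, and it only catches email addresses and US-style phone numbers. The function name `redact_pii`, the patterns, and the placeholder labels are all hypothetical; a real tool would also need to handle names, addresses, student IDs, and more.

```python
import re

# Hypothetical patterns for illustration; real PII detection needs far more coverage.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_PATTERN = re.compile(r"\b\d{3}[-.\s]?\d{3}[-.\s]?\d{4}\b")


def redact_pii(text: str) -> str:
    """Replace email addresses and US-style phone numbers with placeholders."""
    text = EMAIL_PATTERN.sub("[EMAIL]", text)
    text = PHONE_PATTERN.sub("[PHONE]", text)
    return text


if __name__ == "__main__":
    prompt = "Contact jane.doe@example.org or 555-123-4567 about the report."
    # The redacted prompt is what would be sent on to an AI service.
    print(redact_pii(prompt))
```

The point of a sketch like this is that redaction happens before a prompt ever leaves the school’s systems, with no person reading the raw text along the way.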

Data Usage for Model Training

Beyond the concern of PII being proliferated through an LLM, there are also concerns about inadvertently sharing intellectual property, copyrighted material, and sensitive information.

- Training Models: Is user data used to train the AI models? If so, is there clear transparency on this usage? For instance, ChatGPT uses all user prompts and output along with any user feedback to train OpenAI’s models. On the other hand, OpenAI’s API gives access to the same models without using the same information for training purposes (though at a cost). Look for similar statements about whether or not user data is used for training purposes. A word of warning, though: a company saying it uses an API isn’t a guarantee that the creator of the tool isn’t refining its use of the API with user data.

- Future Implications: What mechanisms are in place to ensure that user data doesn’t inadvertently surface in subsequent iterations or applications of the AI?

Access Control and Personal Data Collection

One of the first problems a school may run into while asking students to experiment with AI is the fact that many students simply can’t access AI tools.

- Access Levels: How do different user groups (students, teachers, and administrators) access the AI tool? Is there multi-factor authentication or Single Sign-On (SSO) in place?

- Personal Data Collection: What personal details are necessary to access and use the tool? Does it require potentially sensitive information like phone numbers, and if so, why? For many schools this may be problematic as students either don’t have mobile phone numbers to use or don’t consent to giving this information away.

Cost-benefit Analysis

Finally, something that needs to be acknowledged is that adopting AI at scale as an organization is not cheap! Unlike other tools, the underlying cost of each use of AI is (in my opinion) painfully obvious. For every 750 words of text you enter or receive by interacting with an AI, you’re incurring a cost (whether you or the company is paying for it) of at least $0.001. While that doesn’t seem like a lot, this cost adds up very quickly. Throw in protections for things like privacy, compliance, the ability to audit, access control, etc. (all of which I’ve hopefully convinced you are important), and the cost gets quite high.

- Cost: How much does the AI tool cost, including potential hidden costs or those associated with data breaches? Many of these emerging products are not cheap and are cost-prohibitive. Charging $10/user/month (or even as high as $35/user/month from what I’ve seen) is simply not financially accessible for many schools.

- Comparison with Freely Available Tools: Are there free tools available (like ChatGPT or Poe) that could serve the same or similar purposes? Does this AI solution offer advantages that justify its cost?
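For schools that want to run these numbers themselves, here is a rough sketch of the arithmetic in Python. It uses the figures quoted above ($0.001 per 750 words, $10/user/month). Everything else is a hypothetical assumption for illustration: the function names, the 500 users, the 3,000 words per user per day, and the 20 school days per month.

```python
# Figures quoted in the article above.
WORDS_PER_BILLING_UNIT = 750
MIN_COST_PER_UNIT_USD = 0.001  # stated as "at least", so this is a lower bound


def estimated_monthly_usage_cost(users: int,
                                 words_per_user_per_day: int,
                                 days_per_month: int = 20) -> float:
    """Lower-bound raw usage cost in USD for one month."""
    total_words = users * words_per_user_per_day * days_per_month
    return total_words / WORDS_PER_BILLING_UNIT * MIN_COST_PER_UNIT_USD


def subscription_cost(users: int, price_per_user_per_month: float) -> float:
    """Flat per-seat subscription cost in USD for one month."""
    return users * price_per_user_per_month


if __name__ == "__main__":
    # Hypothetical school: 500 users, 3,000 words each per school day.
    usage = estimated_monthly_usage_cost(users=500, words_per_user_per_day=3000)
    flat = subscription_cost(users=500, price_per_user_per_month=10.0)
    print(f"Lower-bound usage cost: ${usage:,.2f} per month")
    print(f"Per-seat subscription:  ${flat:,.2f} per month")
```

Because the $0.001 figure is a lower bound and leaves out privacy, compliance, auditing, and access control, treat the usage estimate as a floor rather than a quote. Comparing that floor with a vendor’s per-seat price is one way to ask what the difference is paying for.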

Conclusion

Embracing new technologies, especially ones as transformative as AI, is undeniably thrilling for the education sector. The potential for personalized learning, streamlined administrative tasks, and interactive educational experiences is unparalleled. However, as with any innovation, AI also brings its own unique set of challenges, primarily centered around data privacy, access, and cost.

As educators and decision-makers, our primary responsibility is the welfare of our students. This entails ensuring their personal data is protected, the tools they access are equitable and safe, and the investments made in technology provide genuine value to their learning experiences. The questions outlined above serve as a roadmap to navigate this intricate landscape, but they are just the starting point. As AI evolves, so too should our understanding and criteria for evaluating it.

Before diving headlong into this new era, schools must weigh the costs and benefits, understand the nuances of AI, and be prepared to adapt. With thorough due diligence and a commitment to placing students' interests at the forefront, the integration of AI into education can be both revolutionary and responsible.

Remember, AI is not just a tool—it’s an evolving entity. Approach its integration with caution, curiosity, and a dedication to continual learning.